Audio engineer awarded funding to improve and personalize hearing devices and headphones

Hwan Shim is using technology to personalize audio experiences because human ears are all different.

Shim, an assistant professor of electrical and computer engineering technology, is developing new audio technology that could decrease extraneous sounds in noisy environments. This could improve applications from arts performances to personal devices such as hearing aids.

“We often make technology and equipment with ‘average’ ears in mind,” said Shim. “But what if we can personalize this technology when we wear certain headphones or other devices? What if we can add personal effects that can still be natural and more spatial? At RIT, we are pushing this field.”

Shim was recently awarded National Institutes of Health funding of nearly $750,000 for “Semantic-based auditory attention decoder using large language models,” a project to determine how individuals distinguish sounds, and how the brain helps ‘censor’ sound that is non-essential to individuals, including those who need devices to improve hearing.

Addressing sensorineural hearing loss requires more than amplification, and some auditory devices such as cochlear implants, while advanced, continue to improve by differentiating speech from background noise, Shim explained.

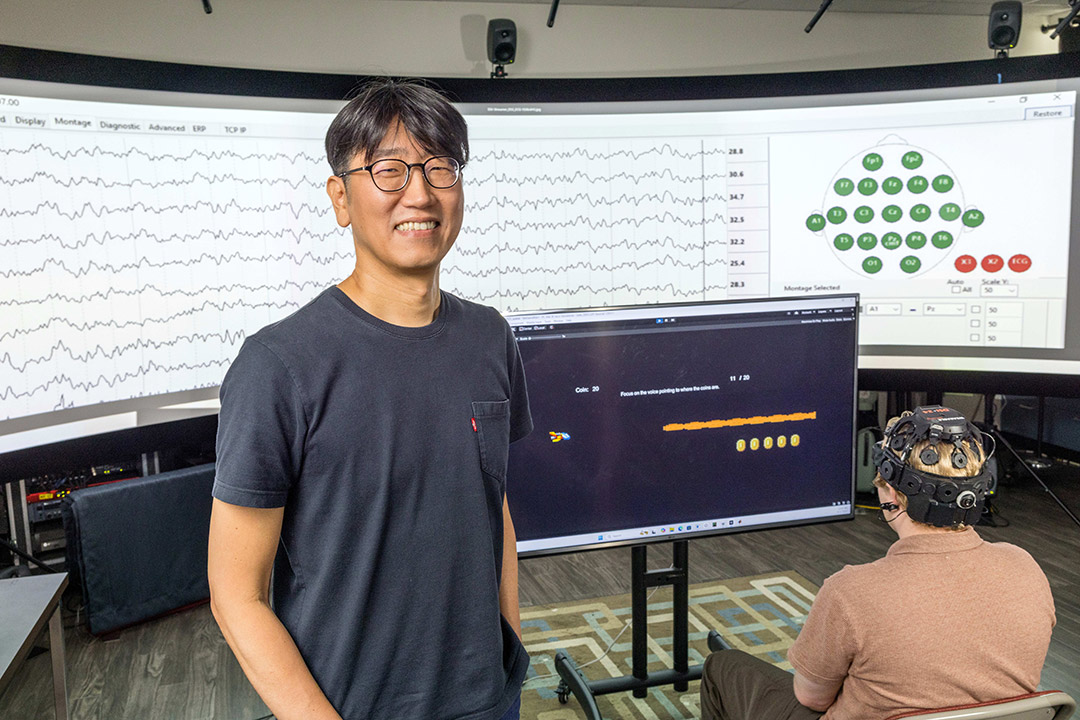

His project, which began in August, could advance this speech-in-noise perception by mimicking the brain’s ability to discern and decode auditory messaging and sounds. His research team will use machine learning and integrate large language models to determine EEG-based selective attention decoding to improve hearing devices.

Traci Westcott/RIT

Biomedical engineering undergraduate Danny Teets, part of the audio engineering research team, wears a prototype EEG device to explore how the brain censors sound in noisy environments.

Today’s audio devices are sophisticated, but some underperform in noisy or distracting environments. To address these challenges, Shim and his research group in the Music and Audio Cognition Laboratory, based in RIT’s College of Engineering Technology, developed a prototype headset to provide neurofeedback information. The device is being used to record signals indicating how the brain recognizes needed information and separates out unwanted distractions.

Shim’s work leverages the acoustic enhancement technology called Active Field Control (AFC), an immersive sound technology developed by Yamaha Corp. and RIT engineering colleague Sungyoung Kim, which is used in live performance spaces, such as auditoriums, and in virtual spaces, including virtual reality and gaming systems. Shim’s early doctoral research focused on spatial impressions, including reflections and reverberation that underpin such technologies. Coupled with AFC technology, he continues to explore spatial audio in various contexts, and these systems allow his research team to recreate realistic, noisy environments to develop and test individualized selective attention decoding techniques.

“The state of the industry is in immersive audio,” said Shim, who is an expert in applied acoustics, auditory cognition, and neuroscience, and who has industry experience as an engineer at Samsung. “Using field control processors, we can actively change the sound space, for example, during a live performance. We are also seeing changes in listening habits, and we are making better technology for immersive audio to give a more natural feeling of sound.”

Shim said that although today’s systems are maturing, there are still gaps and limitations to presenting realistic, although enhanced, sound.

Over the course of the project, the team will work to further three specific areas: improvements to their prototype device; expansion of the training program used to distinguish sounds; and refinements to analytical methods once data from the system are captured. Shim will also be exploring how individuals contextualize information, including sound, and how audio systems then incorporate these distinctions. This is an engineering challenge Shim sees as a natural evolution of audio technologies that have progressed from early recording devices to those with the most sophisticated noise-reducing functions.

“We know that people have their own experiences and intimacy with sound. They can perceive differences,” he said. “We also know that the technology can decode the target speech from noise. Our idea is to clarify meaning of language to be more context-based. We are still figuring out what is going on in the brain. This can be next generation technology.”

Latest News

- RIT Dubai, SmartKable partnering to advance smart grid technologies

  RIT Dubai is partnering with SmartKable Powerline Solutions, a New York-based company, and its Middle East and Africa arm SmartKable Equinova, to develop an AI solution that will enable a smarter electric grid. As part of the agreement, RIT Dubai takes on a small equity stake in SmartKable Equinova to allow for a long-term, beneficial collaboration for all parties involved. Faculty and students will collaborate with the company to advance smart grid technologies by integrating generative AI and predictive analytics into SmartKable’s Line Analyzer software. Students are heavily involved, with some doing capstone projects through Dubai’s Smart Energy Lab, and a postdoctoral student in Rochester is providing architectural oversight and integration. Jinane Mounsef, chair of the electrical engineering and computing science department at RIT Dubai, who is one of the project’s leads, said the group is aiming to develop solutions that automate power assessment reporting with AI-driven insights, provide real-time smart notifications for grid reliability, enhance predictive capabilities to forecast failures and optimize planning, and support intelligent demand-supply control for resilient energy systems. “This initiative highlights how, together, academia and industry can transform challenges into opportunities for innovation and sustainable growth,” said Mounsef. SmartKable’s Line Ranger network measures line voltage, current, and related parameters on lines in real time. It can identify, track, and trace losses, separate commercial from technical issues, enable dynamic line rating, and predict the remaining lifetime of power lines. “We want to help the grid successfully evolve in spite of all the new electrification and items that are happening,” said Bill Collins, co-founder of SmartKable Powerline Solutions.
“We ultimately want to turn the grid into something that is intelligently controlled by AI.” Collins explained that at the end of the project, the team expects that they will be able to start utilizing the solution commercially with customers. “The need for a smart, efficient, and predictable grid is a universal, global issue,” said Wojtek Bulatowicz, managing director of SmartKable Equinova. “This is why SmartKable now has its operations in the United Arab Emirates to serve the Middle East and Africa markets.” SmartKable is based in Skaneateles, N.Y., and already has deployed its Line Ranger devices around the world. The company has previously connected with RIT through the co-op program, and it was interested in partnering with Dubai after learning about the campus’s experts in engineering and AI. The agreement between the campus and the company is key to providing RIT students with the experience of real-life scenarios and the support to find solutions that can be used worldwide. For more information on the Dubai campus, go to the RIT Dubai website. More information on the Line Ranger Network can be found on the SmartKable Powerline Solutions website.

- NTID researchers work to incorporate accessible AED machines on campus

  In emergencies, Automated External Defibrillators (AEDs) help restore life until first responders arrive by giving users auditory prompts to operate the machines. But audio prompts and descriptions are virtually useless for deaf and hard-of-hearing individuals who may need to use AEDs to help save a life. Researchers from RIT’s National Technical Institute for the Deaf have identified an AED that includes visual and text prompts, making it accessible for deaf and hard-of-hearing users and those not familiar with the English language. As a result, accessible AED machines are now available at four campus locations: the first floor of Lyndon Baines Johnson Hall, Student Development Center, Hugh L. Carey Hall, and in a student residence—Ellingson Hall Tower A. Remaining units throughout campus will be replaced with accessible AEDs at end of life. “It’s terrific that AED machines have become widely available, including at RIT, but I’m deaf and what if I was alone and needed to use it on someone?” said Elizabeth Ayers, senior lecturer in NTID’s Department of Science and Mathematics and a licensed clinical sonographer. “I can’t hear the auditory descriptors such as the beeps and other background sounds, and neither can the hundreds of other deaf and hard-of-hearing people on our campus. We knew that something needed to be done in order to make these lifesaving machines user-friendly for just about anyone here.” Ayers and Wendy Dannels, research associate professor; Tiffany Panko, executive director of NTID’s Deaf Hub; and alumna Menna Nicola ’25 (human-centered computing) worked as a team to investigate FDA-approved devices that portrayed the most visually accurate instructions and imagery. Thirty-two devices from seven manufacturers were examined. Of these, three models were identified as meeting accessibility criteria.
RIT selected one for replacement and deployment across campus, with the guidance of Christopher Knigga, director of Facilities Services and Sustainability at NTID, and Jody Nolan, RIT’s manager of Environmental Health and Safety. Nicola, an associate IT analyst at Dow Chemical, was a student when the research began. “I didn’t really know much about AEDs before this project began, but I was thrilled at the opportunity to conduct research that directly impacts me and other deaf people,” she said. “The most enjoyable part of the project for me was the result that led to finding a great match for an accessible AED for deaf and hard-of-hearing individuals.” According to Ayers, next steps include reaching out to other schools, agencies, and organizations focused on deaf and hard-of-hearing individuals to share their research on the accessible AEDs. “RIT was on board with installing these accessible AEDs based on our work,” said Ayers. “It’s our team’s responsibility to be sure that other organizations know the options that are available to them. And hopefully, to take this one step further, manufacturers will continue to work alongside deaf researchers to develop and continually innovate other healthcare products that are fully accessible.”

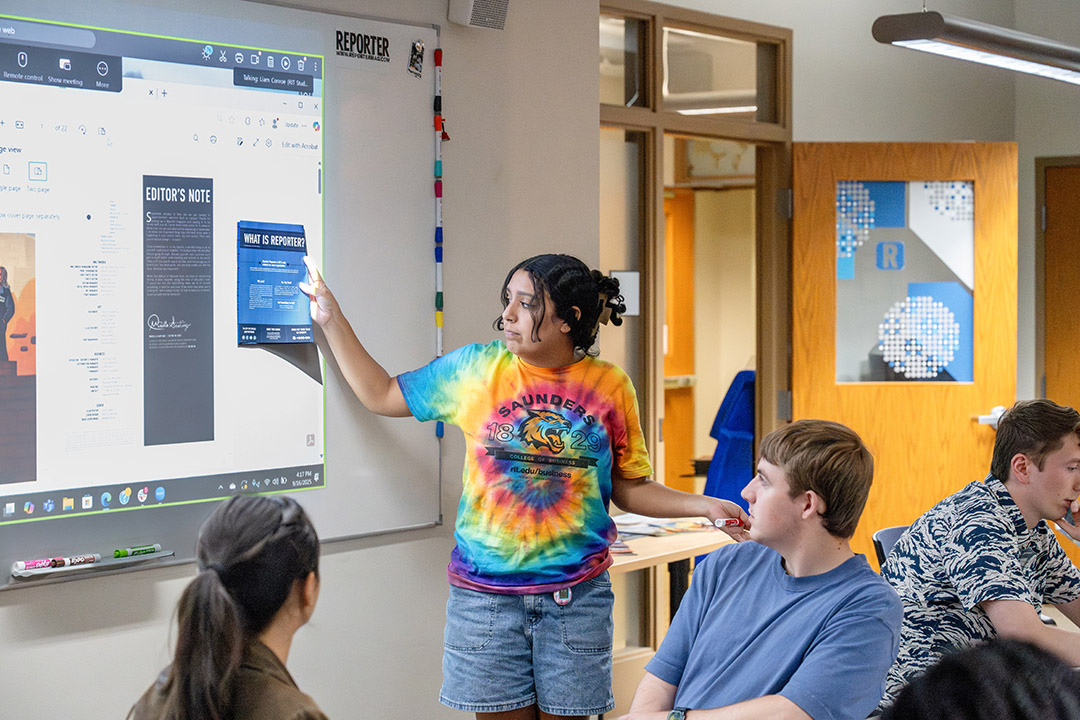

- ‘Reporter’ builds momentum as a platform for student voices

  RIT’s student-run Reporter Magazine is working to return to its roots as a primary information source by and for the RIT community. As it heads into its 75th year in 2026, Reporter has added frequent news updates online between its monthly print publication, has an editorial staff interested in communications and journalism, is seeing interest from students wanting to join the staff, and is again winning awards for its work. Mariella Santiago, a fourth-year journalism major from Pittsford, N.Y., was elected editor-in-chief in January. “I was interested in making Reporter more of a journalism publication,” she said. “We have a more streamlined process of how we do our print publications, with cycles every month. We make sure we are published on time and people meet their deadlines. I’m really proud of this team with what they’ve done.” About 50 students with a variety of majors are on the staff and get paid for their work. Some are freelance writers or photographers, and some receive a stipend. “We’re always looking for more people to help out with our publication,” she said. The organization is editorially independent and student-run, with an advisory board that offers suggestions as needed. Some revenue is collected from advertising, but the bulk of funding is provided by RIT. All of the editorial content comes from the staff. The staff meets weekly for a newsroom brainstorming session at 5 p.m. each Thursday in its office, room A-730 in the basement of Campus Center. They hold organizational meetings at 4 p.m. Tuesdays. Any RIT student or employee is welcome to attend. “We talk about what’s happening nationally and globally, and try to relate those issues to RIT, and what’s trending on campus,” Santiago said. “We want to view issues from a student’s perspective.” There are 3,000 print copies distributed free each month around campus, and that content is also put online and on social media.
Staff members have experimented with videos and podcasts and hope to provide photography and videography workshops to students. Tom Dooley, former program director for RIT’s journalism option, has been Reporter’s faculty adviser for three years. He said this staff has made “an intentional effort to really serve the student body. They take a serious look at what the people in this RIT community want to know about, and how they can deliver that to them.” Dooley said the staff has hired more visual journalists and social media managers, rebuilt their website on a platform with more visual capabilities, and increased their presence on Instagram. “I think they are doing good work now and are taking the job of being a voice for the students seriously,” said Dooley, a former photojournalist, television producer, and filmmaker. “They know their role is important here at RIT to serve the student body through a perspective they can really connect with in an era of local news deserts, with fewer and fewer journalists covering local communities. Reporter is here to add value to students’ lives and occasionally check the administration. And that’s OK for that to happen.” Dooley is quick to say he’s not the staff’s boss and has no editorial say or prior review of what gets published, but he does sit in on their planning meetings, supports them if they have any challenges, and can help them in the creative process if they ask for guidance. One suggestion they followed was to create a manual for the staff with clear editorial guidelines and editorial ethics. Dooley has also traveled with several Reporter staff members to conferences held by the Associated Collegiate Press in Minneapolis and the College Media Association in New York City in the past three years to learn about best practices among other college publications. Reporter has won awards at the conferences.
A 12-member advisory board, which includes faculty and administration members, communications professionals, and alumni who work in media, also meets with staff members periodically, providing guidance and feedback to the students. Dooley said he’s encouraged that some staff members are starting to collaborate with local professional organizations, getting co-ops as they learn journalism first-hand and even getting bylines. Liam Conroe, a journalism major from Jamestown, N.Y., serves as Reporter’s copy managing editor. He completed a co-op as a reporter for Rochester Beacon and hopes to possibly become a sportswriter after college. Noah Gallo, a second-year cybersecurity major from Danbury, Conn., joined the staff as a writer in March after a friend and Dooley, who taught a communication course he was in, suggested he join. He’s written two stories and two reviews since then. While he doesn’t have a vision to become a career journalist, he says he can take aspects of what he’s learning at Reporter and apply them to his major. For the October edition, he’s writing a review of the videogame Cyberpunk 2077. “I never thought I would get to write an article about my favorite game,” Gallo said. “Just the fact I can and that people will read it is so cool to me.” Santiago, who completed an internship with News 10 (WHEC-TV) in Rochester, is gratified at the staff’s work and the product they are producing each month. “When I see it in print and see my editor’s note and my signature there, that is all worth all the work I put into it,” she said. “Seeing the final product and seeing people reading it around campus is always the most rewarding thing.”

- RIT faculty member and students create peace art installation at Woodstock site

  The rolling Bethel Woods hills that once hosted the iconic 1969 Woodstock music festival now hold a new architectural tribute to peace. The Peace Pavilion, designed and built with the leadership of RIT architecture Professor Amanda Reis, invites visitors to pause, reflect, and immerse themselves in the legacy of a site that continues to symbolize cultural change. Reis, in her second year as a faculty member in the Golisano Institute for Sustainability’s Department of Architecture, partnered with longtime collaborator Eduardo Aquino of the University of Manitoba to submit a proposal to BuildFest 2: Peace Rises, a part of the Bethel Woods Art & Architecture Festival. The event, held Sept. 10-14, called on university professors to create “peace structures” of varying scales. Their submission, entered under their research and design practice AREA, was selected for the largest commission. “It was a real honor to be selected,” Reis said. “We saw it as an opportunity to combine design innovation with sustainability and to involve students in a transformative learning experience.” Set against the backdrop of Filippini Pond and an adjoining forest, the pavilion occupies an Instagram-worthy location on the Bethel Woods grounds. The pavilion’s form was inspired by the yin-yang symbol, a representation of interconnected opposites and balance. Reis and Aquino reinterpreted it into a cubic structure that offers a cocoon-like feel, while also offering spectacular views.

  Youngjin Yi

  RIT architecture students gained hands-on experience assembling prefabricated panels for the Peace Pavilion.

  Those visiting the structure will step into a light-filled space whose walls are inscribed with phrases curated from poetry, literature, and music. Some lyrics also come directly from Woodstock-era artists, a connection to the area’s past.
Western Red Cedar was chosen as the primary material because of its beauty and resilience. The material strengthens over time, meaning the Peace Pavilion could endure for years. “Many of the BuildFest structures are temporary, but we wanted to increase the longevity of the Peace Pavilion,” Reis said. “The material gives it that possibility.” Reis emphasized that the project’s success was the result of many minds. While Reis and Aquino established the design, the project became a hands-on experience for RIT architecture students. The project served as an early design-build exercise for Reis’s Architecture Studio course, a master’s architectural design class that typically emphasizes theoretical projects. The Peace Pavilion offered the students a rare chance to see ideas translated into reality. The student team included Noah Baldon, Maddy Bortle, Anna-Leigha Clarke, Ryan Denberg, Sydney Fox, Gabriela Hernandez, Gil Merod, Mackendra Nobes, Julia Resnick, and Youngjin Yi. “The collaboration exposed students to the full cycle of design-build, from concept to construction,” Aquino said. “They encountered unexpected challenges and learned how to improvise solutions during installation, which is where the deepest learning often happens.” In addition to the student team, staff from the SHED—Michael Buffalin, Jim Heaney, and Chris Vorndran—played a key role in fabricating the engraved cedar boards before they made the four-hour trek to the Catskills. Ralph Gutierrez, an architectural intern, also contributed to the design development, while Yi captured photography throughout the process. “All of the dimensional wood was fabricated at 10-foot lengths to minimize waste, and the design required tight tolerances that pushed us to be meticulous during assembly,” Aquino said.
“Those details emphasized the balance between sustainability and craftsmanship.”

  Youngjin Yi

  Inside the Peace Pavilion, engraved phrases line the cedar walls, which include lyrics from popular Woodstock performers.

  Reis mentioned that, during the build, a couple who had attended Woodstock stopped by in their original festival T-shirts, eager to step inside the new structure. The pavilion offers potential for several outdoor activities. “I can imagine people reading, meditating, or just waiting for friends to finish kayaking or swimming nearby,” Reis said. “As architects, we can anticipate some uses, but the most exciting thing is what we can’t predict.” Fox, an architecture graduate student from southern Maine, said the entire process taught her important lessons. “I see architecture as a tool that can bring people together, communicate ideas and stories, and challenge the status quo,” said Fox. “Building this Peace Pavilion with others helped me see the power of collective effort and how creating something together can forge meaningful bonds between people, giving a project even more significance beyond its final result.”

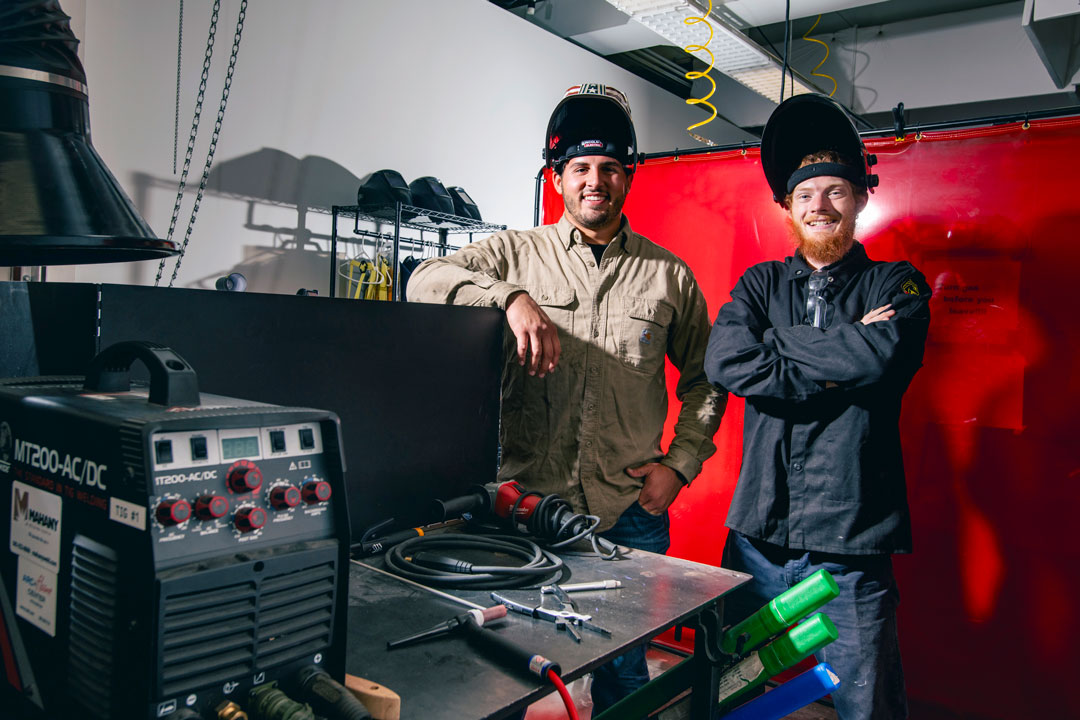

- Students weld together a new club

  It was a spark of an idea that forged a new campus club. A year ago, four engineering technology students were taking the class Foundation of Metals that included a brief training program for undergraduate students to learn how welding is used in manufacturing. They knew they wanted more than welding 101 and started to build the framework of a new club. “It was beneficial to have the class,” said Jack Seeley, chapter president and a fourth-year manufacturing and mechanical engineering technology student from Cherry Valley, N.Y. “But we also wanted to learn more, to take advantage of the resources and equipment we have in our lab area.” It seemed his peers across campus did as well. Within a year, Seeley and the others worked with Student Government, RIT’s College of Engineering Technology (CET) administration and faculty member Richard Roe to establish the new organization. It became one of the first student chapters of the American Welding Society’s Northeast District. RIT’s chapter has 50-plus active members and is open to any major and experience level. “We have a diverse team with a wide range of expertise. While many of us have an engineering technology background, our members also have backgrounds in game design, finance, art, mathematics and NTID,” said Lucio Tomassetti, chapter vice president, and a fifth-year manufacturing and mechanical engineering technology student from Fairport, N.Y. “We are working with the members from NTID to build our chapter in a way to make students who may be deaf or hard-of-hearing comfortable here.”

  Liam Myerow/RIT

  Members of the student chapter of the American Welding Society are learning how to weld for projects as varied as art and mechanical design.

  Welding is the art of using high heat and pressure to mold, shape and secure metals.
Those new to the process can be intimidated at first, but the group merged RIT’s general lab safety rules with specific welding safety measures to formulate a unique training program for new members. “To enhance our training program, we’ve created a chief welder position. This person acts as lead trainer, with responsibilities that include ensuring lab safety, clean up, managing set up and teaching our next group of trainers,” said Tomassetti. Seeley agreed: “We are constantly trying to improve and retrain our members, especially the newer members with less experience. We can start them on smaller projects so that they can learn what welding sounds like, what it feels like, how the helmet reacts, how it feels to wear different types of gloves and getting that person to feel comfortable with welding.” The path to formalizing the student chapter was paved with help from alumnus Michael Krupnicki ’99 (MBA), a longtime supporter of RIT and its students, particularly those on the Baja, Formula and Hot Wheelz performance teams. Students learned how to weld at Arc & Flame, his business in Rochester. Krupnicki also provided much of the state-of-the-art equipment found in CET’s labs and in campus machine shops. The connection to Arc & Flame locally, as well as to the national society, provides multiple opportunities for chapter members to participate in local, regional and national programming, including student design competitions. “For the current academic year, we are arranging a series of programs with local professionals. These sessions are designed to supplement our classroom learning and will cover a variety of practical skills, including designing with welding standards and fundamentals of business ownership,” said Tomassetti. Programs will focus on crucial skills, like improving interpersonal communication for project management and delivering effective presentations to clients.
Seeley agreed: “These things can help students become successful welding engineers and more confident project leaders.”

- William H. Sanders installed as RIT’s 11th president

  RIT will become a destination not just for students but for ideas, Bill Sanders said as part of formal ceremonies to install him as RIT’s 11th president. The university, he said, will be a place where breakthroughs in artificial intelligence, sustainability, and cybersecurity are born; where artists and technologists co-author the future; that graduates leaders prepared for careers that don’t even exist yet; where interdisciplinary research tackles real-world problems; and where the global footprint expands while the commitment to Rochester is deepened. “None of what we aspire to do together can be done without passionate people,” Sanders said. “We have a beautiful campus and the buildings and Tiger Athletics facilities that we have built in the last five years are best in their class. But I believe fundamentally that people are what drives RIT.”

  Carlos Ortiz/RIT

  Andreas Cangellaris, founding president of NEOM University in Saudi Arabia, served as keynote speaker at the ceremony.

  Hundreds of people, including delegates from 42 universities and state and community leaders, attended the tradition-filled ceremony on Sept. 26 at Gordon Field House and Activities Center. Hundreds more watched online. In his inaugural remarks, Sanders announced that RIT has secured gifts to establish five new endowed professorships, adding to the 49 that exist today. He outlined progress on the development of RIT’s new strategic framework, which he calls a shared ambition to build a university that is more inclusive, innovative, and interconnected than ever before. “As we stand at the threshold of a new chapter, I see a university that is not only ready for the future—but ready to shape it,” he said. Sanders was officially installed as president by Susan Puglia, chair of the RIT Board of Trustees, and vice chairs Susan Holliday ’85 (MBA) and Frank Sklarsky ’78 (business administration accounting).
They presented him with the Presidential Collar of Authority, created in 1983 by the late Hans Christensen, the first Charlotte Fredericks Mowris Professor of Contemporary Crafts in the School for American Crafts. Andreas Cangellaris, founding president of NEOM University in Saudi Arabia, served as keynote speaker at the ceremony. Cangellaris and Sanders worked together at the University of Arizona and the University of Illinois at Urbana-Champaign. Cangellaris said Sanders is a natural leader who encourages cross-disciplinary collaboration and builds trust. Those traits, he said, are especially valuable now because of the potential and promise of artificial intelligence. Universities must rethink how they teach and learn, research and discover, and inspire and innovate. “To meet the moment, we need leaders who are wise, courageous, visionary, collaborative, and inspiring — leaders we can trust to bring the university community and all its stakeholders together, to comprehend the challenges and propose and advance the right path forward,” he said. “Bill Sanders is that leader, for this very moment.”

  Elizabeth Lamark

  Bill Sanders delivers remarks at the ceremony marking his inauguration as 11th president of RIT.

  Inauguration events also included faculty presentations, a panel on the future of higher education, campus tours, and a picnic with students. Sanders has nearly 40 years of experience in higher education, having most recently served as the Dr. William D. and Nancy W. Strecker Dean of the College of Engineering at Carnegie Mellon University in Pittsburgh from 2020 to 2025. He started at RIT on July 1. Read the full text of President Sanders’s inauguration remarks.